Are YouTube's algorithms helping to divide us? In this blog post I take a look at YouTube's new reputation as the number one tool for radicalisation.

opinion | social media | data | politics

As the world's richest men decide to use their increasing wealth to go off and colonize space, the rest of us mere mortals are left on Earth trying to get on with life as normal as the Coronavirus continues its death march across the globe. Covid-19 has highlighted what we already knew: the haves will always have and want more, while the have-nots will try to make ends meet as best they can. Then there is this strange middle group that has somehow grown louder and louder since the pandemic began. I can't think of a name for this group of people: a collective consisting mostly of white males from both the left and the right of the political spectrum, who believe that they, and only they, are enlightened enough to know the truth.

It's not just the Coronavirus that has managed to divide us. The internet has done a magical job of this too. The algorithms behind most of the major apps and websites we use to get our news are affecting our perceptions. The very social media platforms that were designed to bring us together are driving us apart by recommending posts that elicit emotional responses, even if this leads to the spread of potentially dangerous misinformation. As we scroll through social media, we are presented with a cherry-picked selection of news and posts, based on what we have previously viewed, liked, or shared. The algorithm behind this cherry-picking has been programmed to show us more of the things that it has decided we will be interested in, and things we are more likely to click on.

These algorithms work out our behaviour and interests from the various user profiles that we have spread across numerous platforms. This includes the information we share voluntarily, like our name and where we live, but it can also include information that has been surmised about us from our online habits, such as the posts we like and share. Depending on the goal of the company behind the apps and websites we choose to get our news from, and depending on how the algorithm has been designed, we could be shown a well-balanced feed. But, unfortunately, this is rarely the case. On "news-based" sites like Facebook and Twitter, we will generally be shown more of what their algorithms have decided we like. The main aim of these companies is to generate clicks and likes - the more the merrier!

The posts we're shown could come from questionable sources or echo the same message we have seen time and time again, but as long as we keep clicking, liking and sharing, the algorithm will keep showing us these same types of results. This is how filter bubbles are created.
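The feedback loop described above can be sketched in a few lines of Python. This is only a toy model, not how any real platform works: the `catalogue` topics, the `recommend` and `simulate` functions, and the assumption that the user always clicks the first item in the feed are all invented for illustration.

```python
from collections import Counter


def recommend(click_history, catalogue, k=3):
    """Return the k topics the user has clicked most often.

    sorted() is stable, so topics with equal click counts keep
    their original catalogue order.
    """
    counts = Counter(click_history)
    ranked = sorted(catalogue, key=lambda topic: -counts[topic])
    return ranked[:k]


def simulate(catalogue, first_click, rounds=5, k=3):
    """Simulate a (hypothetical) user who always clicks the top recommendation."""
    history = [first_click]
    feeds = []
    for _ in range(rounds):
        feed = recommend(history, catalogue, k)
        feeds.append(feed)
        history.append(feed[0])  # the click is fed straight back into the profile
    return history, feeds


catalogue = ["puppies", "diy", "gaming", "politics", "science"]
history, feeds = simulate(catalogue, first_click="politics")

# Every topic the user was ever shown across all rounds
topics_shown = {topic for feed in feeds for topic in feed}
print(history)
print(sorted(topics_shown))
```

After a single click on one topic, that topic sits at the top of every feed, and two of the five topics in the catalogue are never shown at all. Real systems are far more sophisticated, but the self-reinforcing shape of the loop is the same.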

The YouTube Effect

The vast majority of us use YouTube to watch videos about dogs doing silly tricks, or to watch tutorials about how to do various DIY tasks around the house. However, YouTube has developed a reputation for driving users to the darkest corners of the internet, with some viewers, after watching a silly puppy video, being fed recommendations for videos with a more radical theme, or for videos containing completely fake news. This is due to YouTube's recommendation algorithm, which, some argue, has turned the video platform into one of the most powerful radicalizing tools of the 21st century.

YouTube's recommendation algorithm accounts for more than 70% of all the time users spend on the site - it's a great success for them!

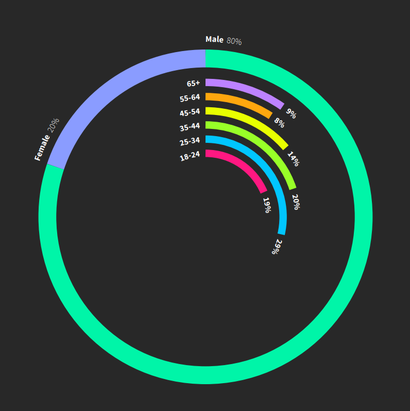

YouTube has 2 billion active users per month, over 500 hours of video uploaded every minute, and is estimated to have the second highest traffic of any website after Google, its parent company. 94% of Americans aged 18-24 use YouTube - more than any other online service.

“Adolescents tend to believe that YouTubers providing information-based content are more authentic because they are ‘genuine’ people talking about their ‘real-life experiences’. This is particularly true for videos produced by YouTubers with a comparatively low number of followers. The appeal and credibility attributed to YouTubers by the adolescents surveyed increased in step with their perceived authenticity.”

A worrying number of people have fallen down the YouTube rabbit hole, finding a place to belong in the alt-right, anti-vaccination, anti-government, incel and alternative health movements that are particularly active on the platform. These are the types of movements, promoted and supported by ex-president Donald Trump, whose members believe that Western civilization is under threat from Muslims, cultural Marxists, Jews and feminism. The supporters and creators of these very popular videos appear to fit a pattern: young, aimless men, often with an interest in playing video games. They visit YouTube to innocently watch a video game walk-through or to pick up gaming tips, and are seduced by the community of creators with far-right and other questionable ideologies. A combination of social, emotional and economic factors can often lead to these young men getting hooked on this extreme content, and they eventually become indoctrinated.

Does this sound too simplified an explanation? Who has really been indoctrinated like this? Let's take a look. YouTube's stated goal was to reach 1 billion hours of viewing per day, a goal it had hit by October 2016. Then along came the Trump/Clinton election, and questions began to arise about YouTube's lack of control over extremist content, misinformation and nauseating kids' videos. The site had quickly worked out that outrage equals attention, and the far-right content creators took full advantage of this fact. The engineers decided to reward creators who made videos that users would watch until the end, and allowed them to show ads alongside their videos, earning these creators a share of the revenue generated. This change was very successful and the far-right was in the right place at the right time. Many of the alt-right creators on YouTube already created long video essays or videoed their podcasts. Now that they could earn money from their content, they were incentivised to churn out as many videos as possible.
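To see why rewarding watch time rather than clicks would favour long video essays, here is a toy ranking in Python. It is a sketch under loud assumptions: the `Video` class, the three example videos and every number in them are made up for illustration, and the scoring formula is a simplification, not YouTube's actual one.

```python
from dataclasses import dataclass


@dataclass
class Video:
    title: str
    click_rate: float   # hypothetical chance that a viewer clicks the thumbnail
    minutes: float      # length of the video in minutes
    completion: float   # hypothetical average fraction of the video watched

    def expected_watch_minutes(self) -> float:
        # Expected minutes watched per time the video is shown to someone
        return self.click_rate * self.minutes * self.completion


# Invented examples; none of these figures come from real data
videos = [
    Video("30-second puppy clip", click_rate=0.20, minutes=0.5, completion=0.9),
    Video("10-minute DIY tutorial", click_rate=0.10, minutes=10, completion=0.5),
    Video("90-minute video essay", click_rate=0.05, minutes=90, completion=0.6),
]

# Ranking by clicks alone versus ranking by expected watch time
by_clicks = sorted(videos, key=lambda v: v.click_rate, reverse=True)
by_watch_time = sorted(videos, key=Video.expected_watch_minutes, reverse=True)

print([v.title for v in by_clicks])
print([v.title for v in by_watch_time])
```

With these made-up numbers the order flips completely: the puppy clip wins on clicks, but the long essay wins on watch time even though far fewer people click on it. Creators who already made long, sticky videos stood to gain the most from that kind of change.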

In 2018, Becca Lewis released a report that warned of the growing trend in far-right radicalisation on YouTube. In 2019, a white supremacist killed 51 people at the Al Noor Mosque and the Linwood Islamic Centre in Christchurch, New Zealand. He was found to have been radicalized on YouTube.

Many of the main players in the Capitol riot on 6th January 2021 were followers of these alt-right YouTube creators, and the graph above shows the impact that social distancing and the Coronavirus lockdowns had on search traffic for white supremacist content.

"By analyzing more than 72 million YouTube comments, the researchers were able to track users and observe them migrating to more hateful content on the platform. They concluded that the long-hypothesized 'radicalization pipeline' on YouTube exists, and its algorithm speeded up radicalization."

In 2015, Google's AI division began developing a new algorithm that could engage users for longer. This new AI, known as Reinforce, predicts a user's engagement and attempts to expand their tastes. The intended goal is to get users to watch many more videos, and it was another huge success for YouTube.

Once again, this change to the recommendation algorithm benefitted the far-right creators who were already masters of keeping people watching their videos.

Can Anything Be Done To Stop Online Radicalization?

A small but growing group of left-wing YouTubers has started trying to bridge the gap and entice viewers away from the alt-right content they see. Using a kind of algorithm hijacking, they produce videos that address many of the same subjects as those uploaded by the far-right creators, so that their videos are recommended to the same users.

Jumping from watching videos created by the far-right to watching videos created by far-left creators could be considered a sort of "victory", but essentially it simply highlights how easily our political views are built around various internet platforms and the things their algorithms decide to show us.

All of the social media sites could and should have been quicker off the mark when it came to controlling the content put up by their users: making and enforcing changes to their terms of service; changing the algorithms to help prevent viewers falling down the extremist rabbit holes; fine-tuning their content moderation algorithms to catch borderline and extreme content more quickly and effectively; and a million other things.

As long as there is no clear definition of hate speech, harassment or extremism, the actions the social media giants take will have little or no effect in tackling the rise in extremism online. By claiming to remain apolitical, these platforms consciously and consistently make the political choice not to protect vulnerable groups.

When clicks are considered more valuable than an individual's safety, when profit and data collection are the end goals, and when governments have no real inclination or understanding of how to get these tech giants under control, then misinformation will continue to spread and radicalisation through these online platforms will continue to happen.

Teach critical thinking to kids at school; help citizens understand the importance of keeping and protecting press freedom; don't get ALL of your news from YouTube; look at different media sites to get a more balanced view and learn how to tell which publications can be trusted.

Essentially, as the viewers, likers and sharers of their posts, we hold the power. If we take our eyes and our clicks elsewhere, things will change pretty quickly when Google and their cohorts start to lose access to our precious data. Play them at their own game.
